(Warning: Long Post. TL;DR summary will come soon! I did make a mind-map for each session - scroll right to the bottom of the post to get them)

I attended the Velocity 2012 Conference on the 2nd October. I just signed up for the tuesday tutorials: x4 90-minute topics due to time constraints and workload. Some of my colleagues attended the entire 3-day event. However, I found that the 90-minute sessions had the right amount of depth and breadth to keep me interested.

This is my first Velocity conference, a gathering aimed squarely at the web performance and operations crowd: absolutely perfect for my current job role and my team. Wonder why I never heard of it until now?

Here's the schedule list.

Monitoring and Observability ( Theo Schlossnagle from OMNITI)

This session started with the simple facts that you can’t fix what you can’t see and you can’t improve what you can’t measure. The difference of the two terms was also important: observation is the passive observation of data providing trends and baseline; while monitoring is the direct observation of failure in real-time.

It's difficult to describe and put together a process for trouble-shooting issues but Theo does a great job in weaving together the need for appropriate tools, flexibility, experience and processes to assist with the whole technique of M & O.

My key points (all pretty sensible but worth noting down):

Escalating Scenarios: A Deep Dive Into Outage Pitfalls (John Allspaw from Etsy.com) A very interesting session: who would have thought that an outage could be so interesting. The key point was that when things go wrong, our judgement is clouded at best, blinded at worst. Successfully navigating a large-scale outage, being aware of potentials gaps in knowledge and context can help make for a better outcome.

People and their responses and understanding them was the first item of discussion and communication is key. In fact, verbal face-to-face is the best with a complex sentence making its way through a chain of a dozen people in a little under 8 seconds. Using IRC or email took about ten times as long, losing context and any other supporting information.

Key points for human responses:

Human pitfalls include:

Scenarios can escalate along a path:

1. L1 - Perception illusion. Everything 'looks' normal, just noisy. Nothing to worry about.

2. L2 - Comprehension. The mental model of the observer takes over, their own (mis-)understanding now clouds the issue.

3. L3 - Projecting. The scar tissue of past outages dictate how people react.

Alerts can have multiple views, can be too sensitive leading to false alarms and the developing of an attitutde of ignoring and questioning the alert when it does happen. Example: Peter crying wolf?

However, trusted alerts should lead to rapid decision making.

Communication however is key with a mix of tested decision making processes in place.

A Web Perf Dashboard: Up & Running in 90 Minutes (Jeroen Tjepkema from MeasureWorks)

This session covered the essentials of building a useable, visually pleasing web performance dashboard.

Aimed squarely at meeting the requirement of passing useful information to the end-user who may not be a technical person but who has to have the correct information to make decisions. So design was extremely important: collecting useful data has it's own challenges but not being able to communicate this to the end-user means the exercise does not meet its full potential.

Key points over design:

Workflow Patterns and Anti-Patterns for Operations (Stephen Nelson-Smith from Atalanta Systems Ltd)

This last session, in my view was the best due to the speaker, his style and the subject matter. I also learnt about the Pomodoro (and here is the Wiki entry for it!) - which I have been applying successfully to my work schedule. It resolves around successful patterns or mental state when acquiring new skills and habits for successful work flows.

A key part of successful patterns in operations in understanding the mindsets. They can be 'ad-hoc', 'Analytical', 'Synergistic' and 'Chaordal' (a mix of chaos and order - new to me!) - the ideal is to move to the last chaordal mindset where there's process and flexibility in equal measure that produces an efficient and effective work place.

One theme therefore is to capture committments clearly: how can one easily visualise your workload. options include using a sticky on a whiteboard, or a ticketting system and sharing these committments with everyone and not just your own team. Understanding that there is a threshold and beyond which, effectiveness and efficiency drops. As soon as you reach it, use a HOOTER! (no really) or some other cue. When tasks are completed, have a reward or at least a recognitition that it the task is completed. Broadcasting risks and dependencies is important in opening up the issue.

A successful workflow pattern therefore has a number of certain characteristics. They are:

Some other tidbits:

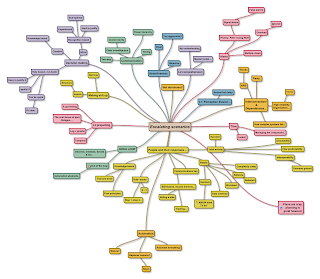

Mind Map Summaries for the four sessions are below:

I attended the Velocity 2012 conference on 2nd October. Because of time constraints and workload, I signed up only for the Tuesday tutorials: four 90-minute sessions. Some of my colleagues attended the entire 3-day event, but I found that the 90-minute sessions had the right amount of depth and breadth to keep me interested.

This was my first Velocity conference, a gathering aimed squarely at the web performance and operations crowd: absolutely perfect for my current job role and my team. I wonder why I had never heard of it until now.

Here's the schedule list.

(Image: My list for the day.)

Monitoring and Observability (Theo Schlossnagle from OmniTI)

This session started with the simple facts that you can’t fix what you can’t see and you can’t improve what you can’t measure. The difference between the two terms was also important: observability is the passive observation of data, which provides trends and baselines, while monitoring is the direct observation of failure in real time.

It's difficult to describe and put together a process for troubleshooting issues, but Theo does a great job of weaving together the need for appropriate tools, flexibility, experience and processes to assist with the whole technique of M & O.

My key points (all pretty sensible but worth noting down):

- Baselines are priceless - how do you know something isn't working when you have no idea what the state of the world is?

- Periodicity of cycles

- Where's the breakpoint?

- Control groups are not easy to put into place, though desirable.

- Cannot correct what we cannot measure.

- LEARN YOUR TOOLS!

- Visualisation of data is very important. Tables are boring.

- Collection and storage are important: big data, and lots of it.

- Resources are cheap, hence quality control is poor.

- Contrast to when one had only 4K of RAM to programme in!

- Understanding the architecture is important
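To make the "baselines are priceless" point concrete, here is a minimal sketch of my own (not something shown in the session). It assumes a simple baseline of mean and standard deviation; the function names, the sample response times and the tolerance of three standard deviations are all made up for illustration.

```python
from statistics import mean, stdev


def build_baseline(samples):
    """Summarise historical samples as (mean, standard deviation)."""
    return mean(samples), stdev(samples)


def deviates_from_baseline(value, baseline, tolerance=3.0):
    """Return True when value is more than `tolerance` standard deviations from the mean."""
    centre, spread = baseline
    return abs(value - centre) > tolerance * spread


# Hypothetical response times (ms) collected during normal operation.
history = [120, 118, 125, 130, 122, 119, 127, 124]
baseline = build_baseline(history)

print(deviates_from_baseline(126, baseline))  # a reading close to the usual values
print(deviates_from_baseline(400, baseline))  # a reading far outside the usual values
```

Without the recorded history there is nothing to compare the new reading against, which is the whole point of the first bullet.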

Escalating Scenarios: A Deep Dive Into Outage Pitfalls (John Allspaw from Etsy.com)

A very interesting session: who would have thought that an outage could be so interesting? The key point was that when things go wrong, our judgement is clouded at best and blinded at worst. When navigating a large-scale outage, being aware of potential gaps in knowledge and context can help make for a better outcome.

Understanding people and their responses was the first item of discussion, and communication is key. In fact, face-to-face verbal communication is best: a complex sentence made its way through a chain of a dozen people in a little under 8 seconds. Using IRC or email took about ten times as long and lost context and other supporting information.

Key points for human responses:

- Skill-based, muscle memory. This is akin to riding a bike: it is a learned reflex and difficult to unlearn. Developed through training and repetition. Fight or flight?

- Rule-based. Process and list driven. Step 1, then step 2 followed by step 3. Apollo 13!

- Knowledge-based. Using first principles and based on trial and error. Gut instinct (though that could also be muscle memory).

Human pitfalls include:

- Heroism. I KNOW what I need to do and therefore I know best. Solo work and solo fix. No information sharing.

- Data overload - too much information to process and too many decision trees leads to indecision. A decision is better than no decision (usually).

- Plans are crap. Planning is great.

Scenarios can escalate along a path:

1. L1 - Perception illusion. Everything 'looks' normal, just noisy. Nothing to worry about.

2. L2 - Comprehension. The mental model of the observer takes over, their own (mis-)understanding now clouds the issue.

3. L3 - Projecting. The scar tissue of past outages dictates how people react.

Alerts can have multiple views and can be too sensitive, leading to false alarms and to an attitude of ignoring or questioning the alert when a real problem does happen. Example: the boy who cried wolf.

However, trusted alerts should lead to rapid decision making.

Communication, however, is key, together with tested decision-making processes.
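One common way to tame the over-sensitive alerts mentioned above is to require several consecutive breaches before firing. This is my own sketch rather than anything from the talk; the function name, the threshold and the default of three breaches are assumptions for illustration.

```python
def should_alert(readings, threshold, required_breaches=3):
    """Alert only when the last `required_breaches` readings all exceed the threshold."""
    if len(readings) < required_breaches:
        return False
    recent = readings[-required_breaches:]
    return all(reading > threshold for reading in recent)


# Hypothetical CPU percentages: one noisy spike versus a sustained problem.
single_spike = [10, 95, 12, 11]
sustained = [10, 95, 96, 97]

print(should_alert(single_spike, threshold=90))
print(should_alert(sustained, threshold=90))
```

The trade-off is a slightly slower alert in exchange for fewer false alarms, which should help keep the alert trusted.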

A Web Perf Dashboard: Up & Running in 90 Minutes (Jeroen Tjepkema from MeasureWorks)

This session covered the essentials of building a useable, visually pleasing web performance dashboard.

It was aimed squarely at passing useful information to the end-user, who may not be a technical person but who needs the correct information to make decisions. So design was extremely important: collecting useful data has its own challenges, but if you cannot communicate it to the end-user, the exercise does not meet its full potential.

Key points on design:

- Communication of KPI required. So that everyone understands what the dashboard is trying to communicate.

- Visually pleasing and easy to understand: so heatmaps, percentages and simple colours are key.

- Design principle is functionality and attractiveness.

- What information are you trying to communicate: trends, service levels, real-time?

- Should be in three parts: 1. Metric (e.g. SLA), 2. Real-time (animated view of user levels) and 3. Business impact (successful transactions).
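The three-part layout from the last bullet can be sketched as a small data structure. This is only my own illustration: the class, the field names and the sample numbers are assumptions, not the speaker's design.

```python
from dataclasses import dataclass


@dataclass
class DashboardSnapshot:
    sla_percent: float       # 1. Metric: share of requests meeting the SLA
    active_users: int        # 2. Real-time: users on the site right now
    successful_orders: int   # 3. Business impact: completed transactions


def build_snapshot(response_times_ms, sla_ms, active_users, orders):
    """Condense raw measurements into the three dashboard parts."""
    if not response_times_ms:
        raise ValueError("need at least one response time")
    within_sla = sum(1 for t in response_times_ms if t <= sla_ms)
    sla_percent = round(100.0 * within_sla / len(response_times_ms), 1)
    successful = sum(1 for ok in orders if ok)
    return DashboardSnapshot(sla_percent, active_users, successful)


# Hypothetical measurements for one reporting interval.
snapshot = build_snapshot(
    response_times_ms=[180, 220, 950, 300, 2100],
    sla_ms=1000,
    active_users=42,
    orders=[True, True, False, True],
)
print(snapshot)
```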

Synthetic testing versus real user monitoring (RUM): two methods of collecting data that can be turned into information. The former is automated, runs on a regular schedule and only detects what you measure. It mimics business processes and can be useful for creating baselines. Tools for synthetic testing include Monitis and SiteConfidence. RUM collects data on what users are doing and how they are interacting with your services, and can highlight poor design or a lack of 'stickiness' in certain areas of the site. Tools for RUM include Keynote, Google Analytics, SOASTA and Torbit. The tools are not mutually exclusive and many can collect both types of data.
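As a rough idea of what a synthetic check does, here is a sketch of my own. It times a scripted probe and reports whether it finished within a time budget. The probe functions are stand-ins I invented; a real check would drive an actual business process, and none of this reflects how the tools named above work internally.

```python
import time


def run_synthetic_check(probe, max_seconds):
    """Run one scripted probe and report whether it succeeded within the time budget."""
    started = time.perf_counter()
    try:
        probe()
        succeeded = True
    except Exception:
        succeeded = False
    elapsed = time.perf_counter() - started
    return {
        "ok": succeeded and elapsed <= max_seconds,
        "succeeded": succeeded,
        "elapsed": elapsed,
    }


def fake_checkout():
    # Hypothetical stand-in for a scripted checkout journey.
    time.sleep(0.01)


def broken_checkout():
    # Hypothetical stand-in for a journey that fails outright.
    raise RuntimeError("simulated failure")


result = run_synthetic_check(fake_checkout, max_seconds=1.0)
print(result["ok"], round(result["elapsed"], 3))
```

Run on a schedule, a check like this only ever reports on the journey it scripts, which is the "only detects what you measure" limitation.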

Workflow Patterns and Anti-Patterns for Operations (Stephen Nelson-Smith from Atalanta Systems Ltd)

This last session, in my view, was the best due to the speaker, his style and the subject matter. I also learnt about the Pomodoro technique, which I have been applying successfully to my work schedule. The session revolves around successful patterns, or mental states, when acquiring new skills and habits for successful workflows.

(Image: Overlearning and how it relates to challenge level and skill level.)

A key part of successful patterns in operations is understanding the mindsets. They can be 'Ad-hoc', 'Analytical', 'Synergistic' and 'Chaordic' (a mix of chaos and order - new to me!). The ideal is to move to the last, chaordic mindset, where process and flexibility in equal measure produce an efficient and effective workplace.

One theme therefore is to capture commitments clearly: how can you easily visualise your workload? Options include using stickies on a whiteboard or a ticketing system, and sharing these commitments with everyone and not just your own team. Understand that there is a threshold beyond which effectiveness and efficiency drop. As soon as you reach it, use a HOOTER! (no really) or some other cue. When tasks are completed, have a reward or at least a recognition that the task is completed. Broadcasting risks and dependencies is important in opening up the issue.

A successful workflow pattern therefore has a number of characteristics. They are:
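The threshold idea can be sketched in a few lines. This is my own toy example, not something from the talk: the class name, the threshold value and the task names are all made up, and the "cue" is just a return value standing in for the hooter.

```python
class CommitmentBoard:
    """A shared list of commitments with a threshold that triggers a cue."""

    def __init__(self, threshold):
        self.threshold = threshold
        self.open_items = []
        self.done_items = []

    def commit(self, task):
        """Record a commitment; return True once the threshold has been reached."""
        self.open_items.append(task)
        return len(self.open_items) >= self.threshold

    def complete(self, task):
        """Move a task to done so that completion is visible and can be recognised."""
        self.open_items.remove(task)
        self.done_items.append(task)


board = CommitmentBoard(threshold=2)
board.commit("patch web servers")           # hypothetical task
if board.commit("review backup policy"):    # hypothetical task
    print("HOOT! Threshold reached, stop taking on work.")
board.complete("patch web servers")
print(board.open_items, board.done_items)
```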

- A name.

- An opening story. Narrative is important.

- A summary.

- A rationale.

- A context.

- The problem. Preferably a one-sentence definition: the difference between expectation and reality.

- Forces acting for and against it.

- Known issues.

- Resulting context.
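The characteristics above read like a template, so here is a small sketch of how one might capture them. The class and field names are my own mapping of the list, and the draft pattern is invented for illustration.

```python
from dataclasses import dataclass, fields


@dataclass
class WorkflowPattern:
    """One field per characteristic in the list above."""
    name: str = ""
    opening_story: str = ""
    summary: str = ""
    rationale: str = ""
    context: str = ""
    problem: str = ""
    forces: str = ""
    known_issues: str = ""
    resulting_context: str = ""


def missing_sections(pattern):
    """Return the names of sections that are still empty."""
    return [f.name for f in fields(pattern) if not getattr(pattern, f.name).strip()]


# Hypothetical draft with only two sections written so far.
draft = WorkflowPattern(
    name="Visible commitments",
    problem="Nobody outside the team can see what we have promised to do.",
)
print(missing_sections(draft))
```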

Some other tidbits:

- The pros and cons of various ticket/task/story workflows

- Kanban

Mind Map Summaries for the four sessions are below:

(Mind map: Escalating Scenarios)

(Mind map: Monitoring and Observation)

(Mind map: Workflow patterns)
